The INEX feature is not sufficient to protect the integrity of your model. Since the data files are extracted by default after the model files, a malicious user could simply overwrite your model files with his own and thus execute his own code. To protect yourself from this, you should set the protect_model_files flag to true when registering/updating your model.

GAMS Jobs

Introduction

If a GAMS job is to be submitted, you need to provide Engine with the name of the model and the namespace where it should be executed. If the model is not registered in the specified namespace, the model files are also required. Besides these main components of a GAMS job, a number of additional options and files can be specified. A few of them are presented here in more detail. An overview of all available specifications can be found in the technical documentation of the API.

INEX file

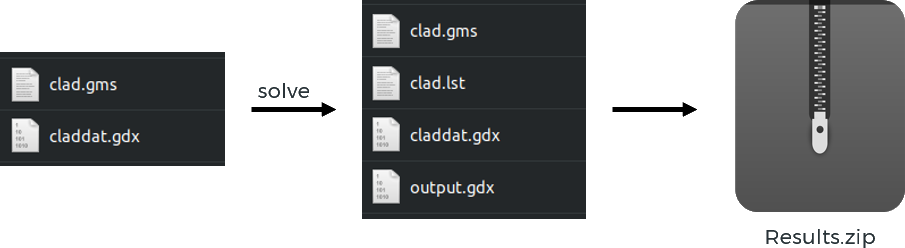

By default, the result files contain all files in the directory where the model was executed. This includes data files, model files, log and listing files as well as all other files that are required for or generated during the model run.

You can use GAMS Engine to restrict the files contained in the results archive. This can be used to reduce the size of the archive. This INEX file has the following schema:

{

"$schema": "http://json-schema.org/draft-04/schema#",

"type": "object",

"properties": {

"type": {

"type": "string",

"enum": [

"include",

"exclude"

]

},

"files": {

"type": "array",

"items": {

"type": "string"

},

"required": [

"items"

]

},

"globbing_enabled": {

"type": "boolean",

"default": true

}

},

"required": [

"type",

"files"

],

"additionalProperties": false

}

Let's assume we register the trnsport model from the GAMS model library. We want users to be able to run this model, but not see our GAMS code. More specifically, we want to exclude all files with the extensions .gms and .inc from the results returned to the users of our model. We therefore register the model with the following INEX file:

{

"type": "exclude",

"files": ["*.gms", "*.inc"]

}

When globbing_enabled is true, it enables the glob patterns (sometimes also referred to as "wildcards"). This field is optional and it is set to true when omitted. If you want to specify filenames exactly and do not need this feature you can set it to false.

Here is an example inex file where the globbing is disabled:

{

"type": "exclude",

"files": ["trnsport.gms", "mydata.inc"],

"globbing_enabled": false

}

You can specify an INEX file both when registering a new model and when submitting a job. When you submit a new job for a registered model that already contains an INEX file, the results are first filtered against that file. Only after that, the INEX file sent with the job will be applied. This means that when you submit a new job, you cannot remove restrictions that are associated with the registered model.

Text/Stream entries

When executing a job, you can register both so-called text entries and stream entries. These can be any UTF-8 encoded text files generated during your model run. In the case of stream entries the contents of these files can be streamed while your job is running. Text entries can only be displayed after your job is complete. This feature is useful if you want to view certain files while your job is running (e.g. solvetrace files) or before downloading the (possibly extensive) result archive.

While text entries can be retrieved multiple times via the GET endpoint /jobs/{token}/text-entry/{entry_name}, the contents of stream entries are pushed into a FIFO (first-in, first-out) queue. The DELETE endpoint /jobs/{token}/stream-entry/{entry_name} can be used to empty the queue and retrieve the content.

Note that the maximum size for text entries is 10 MB and can be configured via the /configuration endpoint (admins only). Text entries should not be used for very large text files.

The GAMS log file (process's standard output) is a special type of stream entry. Thus, it has its own endpoint: /jobs/{token}/unread-logs.

Hypercube jobs

In addition to a normal GAMS job, GAMS Engine also supports so-called Hypercube jobs. These are essentially a collection of normal jobs with varying command line arguments. Why is this useful? Imagine a GAMS model that runs through a number of different scenarios. The model is always initialized by the same large GDX file, but behaves differently depending on double dash parameter --scenId. In this situation you want to avoid uploading the same large GDX file for each scenario. Instead, you want to upload it once and create a number of jobs with different command line arguments: a Hypercube job.

To spawn a new Hypercube job, you need to use the POST /hypercube/ endpoint. The only difference to posting a normal job is a so-called Hypercube description file. This is a JSON file that describes your Hypercube job. This Hypercube description file has the following schema:

{

"$schema": "http://json-schema.org/draft-04/schema#",

"type": "object",

"properties": {

"jobs": {

"type": "array",

"items": {

"type": "object",

"properties": {

"id": {

"type": "string",

"minLength": 1,

"maxLength": 255,

"pattern": "^[0-9a-zA-Z_\-. ]+$"

},

"arguments": {

"type": "array",

"items": {

"type": "string"

},

"minItems": 1

}

},

"required": [

"id",

"arguments"

]

},

"required": [

"items"

],

"minItems": 1

}

},

"required": [

"jobs"

]

}

With this in mind, our example described above could result in the following Hypercube description file:

{

"jobs": [

{

"id": "first scenario",

"arguments": ["--scenId=1"]

},

{

"id": "second scenario",

"arguments": ["--scenId=2"]

},

{

"id": "third scenario",

"arguments": ["--scenId=3"]

}

]

}

This would result in the creation of three GAMS jobs with different values for --scenId. The id of a job can be any string. However, it must be unique among the different jobs of a Hypercube job and is used as the directory name where the results are stored. The content of the result archive of the Hypercube job described above would look like this (results are filtered via INEX file):

.

├── first\ scenario

│ └── results.gdx

├── second\ scenario

│ └── results.gdx

└── third\ scenario

└── results.gdx

3 directories, 3 files

Job dependencies

Jobs can be dependent on other jobs. This means the following:

- A dependent job is not started until all of its prerequisites are finished.

- The workspace of a job with dependencies is initialized by extracting the results of the preceding jobs in the order you specify.

While the first point is fairly straightforward, the second is best explained with a small example. Suppose we have a job c that depends on jobs a and b. Before job c is started, first the result archive of job a is extracted, then that of job b and finally the model and job data of job c. If a results.gdx file exists in both a and b, the one from job b overwrites the one from job a. Thus, the order in which you specify the dependencies is important.

A common case where this feature is very useful is pre/post processing. You may have a long-running job that generates a large amount of results. However, you may not be interested in all of these results. Rather, you want to run analyses on parts of the results and then also retrieve only the part of the results you are interested in at the moment. Using a post-processing job with a dependency on the main job along with INEX files allows you to do just that.

Prerequisite jobs must be accessible at the time of submission (i.e., it must be your own job, an invitee's job, or a job shared with you).

Job priorities Engine One

Jobs can be assigned a priority. This way, a job may be run before another job even if this other job was submitted earlier. Note that this only affects the order in the queue; already running jobs are not evicted. GAMS Engine supports three priority levels: low, medium and high. Essentially, a total of three FIFO queues will be created - one for each priority level - and workers will first receive jobs from the high priority queue, then from the medium and then from the low queue. This means that starvation can occur: if a job has low priority and users keep sending medium or high priority jobs, the low priority job may wait indefinitely.

A priority can be assigned to a job when submitting it (via the POST /jobs/ endpoint). If no priority is explicitly specified, the medium priority is assigned.

The job priorities feature is disabled by default and is currently only supported on Engine One. To enable the feature via the Engine UI, navigate to the Administration view and click the Enable job priorities button (or use the PATCH /configuration endpoint and set job_priorities_access to ENABLED). Please note that this feature should only be enabled/disabled when the system is idle, i.e. when no jobs are running or being submitted. Otherwise, jobs may end up in an undefined state.

Job priorities are currently not supported for Hypercube jobs.