Table of Contents

This tutorial explains how to use the GAMS Connect agents SQLReader and SQLWriter to exchange data between GAMS and various database management systems (DBMS). Both agents allow to specify the connectionType that will be used to connect to a DBMS. The connection option allows to specify a dictionary with the required connection information to access the DBMS.

In this tutorial, we will provide guidance on how to connect to each of the supported DBMS (MS-Access, MySQL, Postgres, SQLite and SQL Server) using the corresponding DBMS-specific connection types. The generic connection types, pyodbc and sqlalchemy, allow to Connect to various DBMS (e.g. Oracle) and are covered in independent sections of the tutorial - refer to Data Exchange using PyODBC and Data Exchange using SQLAlchemy.

The connection type sqlalchemy allows to connect to various DBMS by providing the appropriate dialect and driver in the connection dictionary. The connection type pyodbc allows to connect to any DBMS that provides an ODBC (Open Database Connectivity) driver. It also allows to connect through a Data Source Name (DSN), which allows hiding sensitive connection information. Note that for both generic connection types, pyodbc and sqlalchemy, the user is responsible for ensuring that the required database drivers are installed on the system. The DBMS-specific connection types often provide a superior performance and in most cases do not require additional driver installation, as they are already included in the GAMS distribution.

This tutorial is based on the trnsport model from the GAMS model library. Consider the parameters of the trnsport model already stored in various DBMS for the SQLReader to import. After solving the trnsport model, the SQLWriter exports the model results to each DBMS.

Data Exchange using PyODBC

PyODBC is a Python library that allows to connect to any DBMS that provides an ODBC (Open Database Connectivity) driver. If the appropriate ODBC drivers are installed on the system, PyODBC can serve as a bridge to facilitate data transfer between GAMS and various DBMS. PyODBC is also capable of connecting to a database through a Data Source Name (DSN) that allows to hide sensitive connection information. If a DSN is configured, it can be provided in the connection dictionary.

- Note

- For Windows systems, the required

pyodbcPython library is already included in the GAMS distribution. For non-Windows systems, the user is responsible for installing pyodbc and handling any other dependencies. One can either extend GMSPython or use an external Python 3 installation that provides the required libraries.

Below, we present an example that connects to MySQL using connection type pyodbc and a configured DSN.

Import from MySQL

Consider the following example that imports data from a local MySQL database using the SQLReader agent with connectionType: pyodbc:

Set i 'canning plants', j 'markets';

Parameter d(i<,j<) 'distance in thousands of miles'

a(i) 'capacity of plant i in cases'

b(j) 'demand at market j in cases';

Scalar f 'freight in dollars per case per thousand miles';

$onEmbeddedCode Connect:

- SQLReader:

connection: {"DSN": "mysqlodbc"}

# The following connection dictionary is also valid

# connection: {"user":"root", "password": "strong_password", "port": "3306", "database": "testdb", "driver": "MySQL ODBC 8.0 ANSI Driver"}

connectionType: pyodbc

symbols:

- name: d

query: "SELECT * FROM d;"

- name: a

query: "SELECT * FROM a;"

- name: b

query: "SELECT * FROM b;"

- name: f

query: "SELECT * FROM f;"

- GAMSWriter:

symbols: all

$offEmbeddedCode

display i, j, d, a, b, f;

[...]

The MySQL database contains four tables with data for the parameters d, a, b and f as shown here.

The example uses compile-time embedded Connect code to import the data from the MySQL database into GAMS. The SQLReader agent reads the data from the MySQL database using the configured DSN and creates the corresponding parameters in the Connect database. The GAMSWriter agent then writes all parameters to the GAMS database outside of the embedded Connect code. Note that the sets i and j are implicitly defined through the parameter d.

Export to MySQL

Consider the following example that exports data to a local MySQL database using the SQLWriter agent with connectionType: pyodbc:

[...]

Model transport / all /;

solve transport using lp minimizing z;

embeddedCode Connect:

- GAMSReader:

symbols:

- name: x

- Projection:

name: x.l(i,j)

newName: x_level(i,j)

- SQLWriter:

connection: {"DSN": "mysqlodbc"}

# The following connection dictionary is also valid

# connection: {"user":"root", "password": "strong_password", "port": "3306", "database": "testdb", "driver": "MySQL ODBC 8.0 ANSI Driver"}

connectionType: pyodbc

columnEncloser: "`"

ifExists: replace

symbols:

- name: x_level

tableName: xLevel

endEmbeddedCode

After solving the model, execution-time embedded Connect code is used to export the results to the MySQL database. The GAMSReader agent reads variable x from the GAMS database into the Connect database. The Projection agent is used to create the new parameter x_level that contains only the level values of variable x. Finally, the parameter x_level is written to the table xLevel in the MySQL database using the SQLWriter agent.

- Note

- When connecting to a database via

connectionType: pyodbc, the SQLWriter option columnEncloser can be used to specify the character to enclose column names. Since MySQL uses a backtick (`) as the delimiter to enclose column names, we setcolumnEncloser: "`"in the example.

Here is how table xLevel looks like after exporting parameter x_level:

mysql> select * from xLevel; +-----------+----------+-------+ | i | j | value | +-----------+----------+-------+ | seattle | new-york | 50 | | seattle | chicago | 300 | | seattle | topeka | 0 | | san-diego | new-york | 275 | | san-diego | chicago | 0 | | san-diego | topeka | 275 | +-----------+----------+-------+ 6 rows in set (0.00 sec)

Data Exchange using SQLAlchemy

SQLAlchemy is a Python library that allows to connect to various DBMS by providing the appropriate dialect and driver via drivername: <dialect+driver> in the connection dictionary.

- Note

- The user is responsible that the required database drivers are installed on the system. Following database drivers are already included in the GAMS distribution: psycopg2, pymssql, pymysql and pyodbc (on Windows only). If additional Python libraries need to be installed, one can either extend GMSPython or use an external Python 3 installation that provides the required libraries.

Below, we present an example that connects to MySQL using connection type sqlalchemy and drivername: 'mysql+pymysql'. Refer to the documentation of the SQLReader option connection for examples of connection dictionaries for other databases (check out the list of dialects under sqlalchemy).

Import from MySQL

Consider the following example that imports data from a local MySQL database using the SQLReader agent with connectionType: sqlalchemy:

Set i 'canning plants', j 'markets';

Parameter d(i<,j<) 'distance in thousands of miles'

a(i) 'capacity of plant i in cases'

b(j) 'demand at market j in cases';

Scalar f 'freight in dollars per case per thousand miles';

$onEmbeddedCode Connect:

- SQLReader:

connection: {'drivername': 'mysql+pymysql', 'username': 'root', 'password': 'strong_password', 'host':'localhost', 'port': 3306, 'database': 'testdb'}

connectionType: sqlalchemy

symbols:

- name: d

query: "SELECT * FROM d;"

- name: a

query: "SELECT * FROM a;"

- name: b

query: "SELECT * FROM b;"

- name: f

query: "SELECT * FROM f;"

- GAMSWriter:

symbols: all

$offEmbeddedCode

display i, j, d, a, b, f;

[...]

The MySQL database contains four tables with data for the parameters d, a, b and f as shown here.

The example uses compile-time embedded Connect code to import the data from the MySQL database into GAMS. The SQLReader agent reads the data from the MySQL database and creates the corresponding parameters in the Connect database. The GAMSWriter agent then writes all parameters to the GAMS database outside of the embedded Connect code. Note that the sets i and j are implicitly defined through the parameter d.

Export to MySQL

Consider the following example that exports data to a local MySQL database using the SQLWriter agent with connectionType: sqlalchemy:

[...]

Model transport / all /;

solve transport using lp minimizing z;

embeddedCode Connect:

- GAMSReader:

symbols:

- name: x

- Projection:

name: x.l(i,j)

newName: x_level(i,j)

- SQLWriter:

connection: {'drivername': 'mysql+pymysql', 'username': 'root', 'password': 'strong_password', 'host':'localhost', 'port': 3306, 'database': 'testdb'}

connectionType: sqlalchemy

ifExists: replace

symbols:

- name: x_level

tableName: xLevel

endEmbeddedCode

After solving the model, execution-time embedded Connect code is used to export the results to the MySQL database. The GAMSReader agent reads variable x from the GAMS database into the Connect database. The Projection agent is used to create the new parameter x_level that contains only the level values of variable x. Finally, the parameter x_level is written to the table xLevel in the MySQL database using the SQLWriter agent.

Here is how table xLevel looks like after exporting parameter x_level:

mysql> select * from xLevel; +-----------+----------+-------+ | i | j | value | +-----------+----------+-------+ | seattle | new-york | 50 | | seattle | chicago | 300 | | seattle | topeka | 0 | | san-diego | new-york | 275 | | san-diego | chicago | 0 | | san-diego | topeka | 275 | +-----------+----------+-------+ 6 rows in set (0.00 sec)

Data Exchange with MS-Access

Microsoft Access (MS-Access), is a proprietary relational DBMS from Microsoft. It is a member of the Microsoft Office system and a file-based database system. This makes it a platform specific (Windows only) DBMS.

- Note

- The connectivity to MS-Access databases is available on Windows only and requires a 64-bit MS-Access ODBC driver. See connection for more information.

The Connect agents SQLReader and SQLWriter provide three possible connection types to connect to an MS-Access database file (*.mdb, *.accdb): access, pyodbc and sqlalchemy. Both connection types, access and pyodbc, utilize the pyodbc Python library to connect to MS-Access. However, connection type access provides faster insertion methods compared to the generic pyodbc connection, making it the more efficient option for exporting data.

Below, we present an example using the connection type access. Refer to the sections Data Exchange using PyODBC and Data Exchange using SQLAlchemy for examples on how to connect using pyodbc and sqlalchemy.

Import from MS-Access

Consider the following example that imports data from a MS-Access database file using the SQLReader agent with connectionType: access:

Set i 'canning plants', j 'markets';

Parameter d(i<,j<) 'distance in thousands of miles'

a(i) 'capacity of plant i in cases'

b(j) 'demand at market j in cases';

Scalar f 'freight in dollars per case per thousand miles';

$onEmbeddedCode Connect:

- SQLReader:

connection: {'DRIVER':'Microsoft Access Driver (*.mdb, *.accdb)', 'DBQ': 'D:\Projects\FileBasedDBs\transport_data.accdb'}

connectionType: access

symbols:

- name: d

query: "SELECT * FROM d;"

- name: a

query: "SELECT * FROM a;"

- name: b

query: "SELECT * FROM b;"

- name: f

query: "SELECT * FROM f;"

- GAMSWriter:

symbols: all

$offEmbeddedCode

display i, j, d, a, b, f;

[...]

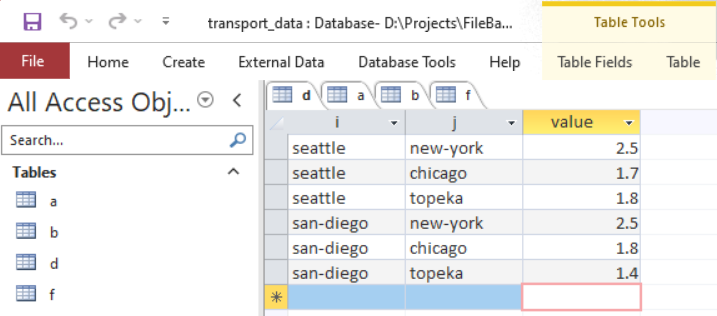

The MS-Access database file transport_data.accdb contains four tables with data for the parameters d, a, b and f:

The example uses compile-time embedded Connect code to import the data from the MS-Access database file transport_data.accdb into GAMS. The SQLReader agent reads the data from the MS-Access database file and creates the corresponding parameters in the Connect database. The GAMSWriter agent then writes all parameters to the GAMS database outside of the embedded Connect code. Note that the sets i and j are implicitly defined through the parameter d. Embedded Connect code is also available at execution-time, see the example in the following section Export to MS-Access.

Export to MS-Access

Consider the following example that exports data to a MS-Access database file using the SQLWriter agent with connectionType: access:

[...]

Model transport / all /;

solve transport using lp minimizing z;

embeddedCode Connect:

- GAMSReader:

symbols:

- name: x

- Projection:

name: x.l(i,j)

newName: x_level(i,j)

- SQLWriter:

connection: {'DRIVER':'Microsoft Access Driver (*.mdb, *.accdb)', 'DBQ': 'D:\Projects\FileBasedDBs\transport_data.accdb'}

connectionType: access

ifExists: replace

symbols:

- name: x_level

tableName: xLevel

endEmbeddedCode

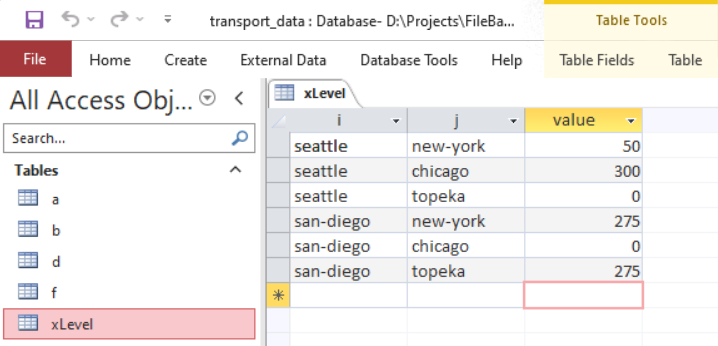

After solving the model, execution-time embedded Connect code is used to export the results to the MS-Access database file transport_data.accdb. The GAMSReader agent reads variable x from the GAMS database into the Connect database. The Projection agent is used to create the new parameter x_level that contains only the level values of variable x. Finally, the parameter x_level is written to the table xLevel in the MS-Access database file using the SQLWriter agent. Note that if the database file does not already exist at the export location, the SQLWriter creates a new database file at the path specified in the DBQ parameter of the connection dictionary.

Here is how the database file looks like after exporting parameter x_level to table xLevel:

The SQLWriter with connectionType: access provides two insertion methods for writing to the MS-Access database file: default (default) and bulkInsert. See also insertMethod in the SQLWriter documentation. The default insertion method used in the example above is well suited for exporting small and medium sized data. The bulkInsert insertion method allows for faster insertion when exporting large amounts of data. It creates a temporary CSV file and then imports the file into the database.

- Note

- Use

connectionType: accesswith optioninsertMethod: bulkInsertwhen exporting large amounts of data to a MS-Access database file.

Data Exchange with MySQL

MySQL is a free and open-source relational DBMS. The Connect agents, SQLReader and SQLWriter, provide three possible connection types to communicate with a MySQL database: mysql, pyodbc and sqlalchemy. The DBMS-specific connection type mysql offers enhanced performance compared to the generic connection types pyodbc and sqlalchemy. This is achieved by utilizing the specialized pymysql Python library, which streamlines communication with MySQL specifically.

Below, we present an example using the connection type mysql. Refer to the sections Data Exchange using PyODBC and Data Exchange using SQLAlchemy for examples on how to connect using pyodbc and sqlalchemy.

Import from MySQL

Consider the following example that imports data from a local MySQL database using the SQLReader agent with connectionType: mysql:

Set i 'canning plants', j 'markets';

Parameter d(i<,j<) 'distance in thousands of miles'

a(i) 'capacity of plant i in cases'

b(j) 'demand at market j in cases';

Scalar f 'freight in dollars per case per thousand miles';

$onEmbeddedCode Connect:

- SQLReader:

connection: {'user': 'root', 'password': 'strong_password', 'port': 3306, 'database': 'testdb', 'host': 'localhost'}

connectionType: mysql

symbols:

- name: d

query: "SELECT * FROM d;"

- name: a

query: "SELECT * FROM a;"

- name: b

query: "SELECT * FROM b;"

- name: f

query: "SELECT * FROM f;"

- GAMSWriter:

symbols: all

$offEmbeddedCode

display i, j, d, a, b, f;

[...]

MySQL has a command-line client which allows to inspect the tables in the database. The MySQL database contains four tables with data for the parameters d, a, b and f:

mysql> select * from d; +-----------+----------+-------+ | i | j | value | +-----------+----------+-------+ | seattle | new-york | 2.5 | | seattle | chicago | 1.7 | | seattle | topeka | 1.8 | | san-diego | new-york | 2.5 | | san-diego | chicago | 1.8 | | san-diego | topeka | 1.4 | +-----------+----------+-------+ 6 rows in set (0.00 sec) mysql> select * from a; +-----------+-------+ | i | value | +-----------+-------+ | seattle | 350 | | san-diego | 600 | +-----------+-------+ 2 rows in set (0.00 sec) mysql> select * from b; +----------+-------+ | j | value | +----------+-------+ | new-york | 325 | | chicago | 300 | | topeka | 275 | +----------+-------+ 3 rows in set (0.00 sec) mysql> select * from f; +-------+ | value | +-------+ | 90 | +-------+ 1 row in set (0.00 sec)

The example uses compile-time embedded Connect code to import the data from the MySQL database into GAMS. The SQLReader agent reads the data from the MySQL database and creates the corresponding parameters in the Connect database. The GAMSWriter agent then writes all parameters to the GAMS database outside of the embedded Connect code. Note that the sets i and j are implicitly defined through the parameter d. Embedded Connect code is also available at execution-time, see the example in the following section Export to MySQL.

Export to MySQL

Consider the following example that exports data to a local MySQL database using the SQLWriter agent with connectionType: mysql:

[...]

Model transport / all /;

solve transport using lp minimizing z;

embeddedCode Connect:

- GAMSReader:

symbols:

- name: x

- Projection:

name: x.l(i,j)

newName: x_level(i,j)

- SQLWriter:

connection: {'user': 'root', 'password': 'strong_password', 'port': 3306, 'database': 'testdb', 'host': 'localhost'}

connectionType: mysql

ifExists: replace

symbols:

- name: x_level

tableName: xLevel

endEmbeddedCode

After solving the model, execution-time embedded Connect code is used to export the results to the MySQL database. The GAMSReader agent reads variable x from the GAMS database into the Connect database. The Projection agent is used to create the new parameter x_level that contains only the level values of variable x. Finally, the parameter x_level is written to the table xLevel in the MySQL database using the SQLWriter agent.

Here is how table xLevel looks like after exporting parameter x_level:

mysql> select * from xLevel; +-----------+----------+-------+ | i | j | value | +-----------+----------+-------+ | seattle | new-york | 50 | | seattle | chicago | 300 | | seattle | topeka | 0 | | san-diego | new-york | 275 | | san-diego | chicago | 0 | | san-diego | topeka | 275 | +-----------+----------+-------+ 6 rows in set (0.00 sec)

The SQLWriter with connectionType: mysql provides two insertion methods for writing to a MySQL database: default (default) and bulkInsert. See also insertMethod in the SQLWriter documentation. The default insertion method used in the example above is well suited for exporting small and medium sized data. The bulkInsert insertion method allows for faster insertion when exporting large amounts of data. It creates a temporary CSV file and then imports the file into the MySQL database using the LOAD DATA LOCAL INFILE query that allows to read text into tables at very high speed.

- Note

- Use

connectionType: mysqlwith optioninsertMethod: bulkInsertwhen exporting large amounts of data to the MySQL database. Note that the optionLOCAL_INFILEmust be enabled on the server.

Data Exchange with Postgres

PostgreSQL, also known as Postgres, is a free and open-source relational DBMS. The Connect agents SQLReader and SQLWriter provide three possible connection types to connect to a Postgres database: postgres, pyodbc and sqlalchemy. The DBMS-specific connection type postgres utilizes the specialized psycopg2 Python library, providing significant performance gains over the generic connection types pyodbc and sqlalchemy.

Below, we present an example using the connection type postgres. Refer to the sections Data Exchange using PyODBC and Data Exchange using SQLAlchemy for examples on how to connect using pyodbc and sqlalchemy.

Import from Postgres

Consider the following example that imports data from a Postgres database using the SQLReader agent with connectionType: postgres:

Set i 'canning plants', j 'markets';

Parameter d(i<,j<) 'distance in thousands of miles'

a(i) 'capacity of plant i in cases'

b(j) 'demand at market j in cases';

Scalar f 'freight in dollars per case per thousand miles';

$onEmbeddedCode Connect:

- SQLReader:

connection: {'user': 'postgres', 'password': 'strong_password', 'port': 5432, 'database': 'testdb', 'host': 'localhost'}

connectionType: postgres

symbols:

- name: d

query: "SELECT * FROM d;"

- name: a

query: "SELECT * FROM a;"

- name: b

query: "SELECT * FROM b;"

- name: f

query: "SELECT * FROM f;"

- GAMSWriter:

symbols: all

$offEmbeddedCode

display i, j, d, a, b, f;

[...]

Postgres has an interactive terminal front-end psql which allows to inspect the tables in the database. The Postgres database contains four tables with data for the parameters d, a, b and f:

postgres=# \connect testdb

You are now connected to database "testdb" as user "postgres".

testdb=# select * from public.d;

i | j | value

-----------+----------+-------

seattle | new-york | 2.5

seattle | chicago | 1.7

seattle | topeka | 1.8

san-diego | new-york | 2.5

san-diego | chicago | 1.8

san-diego | topeka | 1.4

(6 rows)

testdb=# select * from public.a;

i | value

-----------+-------

seattle | 350

san-diego | 600

(2 rows)

testdb=# select * from public.b;

j | value

----------+-------

new-york | 325

chicago | 300

topeka | 275

(3 rows)

testdb=# select * from public.f;

value

-------

90

(1 row)

The example uses compile-time embedded Connect code to import the data from the Postgres database into GAMS. The SQLReader agent reads the data from the Postgres database and creates the corresponding parameters in the Connect database. The GAMSWriter agent then writes all parameters to the GAMS database outside of the embedded Connect code. Note that the sets i and j are implicitly defined through the parameter d. Embedded Connect code is also available at execution-time, see the example in the following section Export to Postgres.

Export to Postgres

Consider the following example that exports data to a Postgres database using the SQLWriter agent with connectionType: postgres:

[...]

Model transport / all /;

solve transport using lp minimizing z;

embeddedCode Connect:

- GAMSReader:

symbols:

- name: x

- Projection:

name: x.l(i,j)

newName: x_level(i,j)

- SQLWriter:

connection: {'user': 'postgres', 'password': 'strong_password', 'port': 5432, 'database': 'testdb', 'host': 'localhost'}

connectionType: postgres

ifExists: replace

symbols:

- name: x_level

tableName: xLevel

endEmbeddedCode

After solving the model, execution-time embedded Connect code is used to export the results to the Postgres database. The GAMSReader agent reads variable x from the GAMS database into the Connect database. The Projection agent is used to create the new parameter x_level that contains only the level values of variable x. Finally, the parameter x_level is written to the table xLevel in the Postgres database using the SQLWriter agent.

Here is how table xLevel looks like after exporting parameter x_level:

testdb=# select * from public.xLevel;

i | j | value

-----------+----------+-------

seattle | new-york | 50

seattle | chicago | 300

seattle | topeka | 0

san-diego | new-york | 275

san-diego | chicago | 0

san-diego | topeka | 275

(6 rows)

The SQLWriter with connectionType: postgres provides two insertion methods for writing to a Postgres database: default (default) and bulkInsert. See also insertMethod in the SQLWriter documentation. The default insertion method used in the example above is well suited for exporting small and medium sized data. The bulkInsert insertion method allows for faster insertion when exporting large amounts of data. It utilizes the copy_expert method of the psycopg2 Python library to efficiently insert large amounts of data into a table of the Postgres database.

- Note

- Use

connectionType: postgreswith optioninsertMethod: bulkInsertwhen exporting large amounts of data to the Postgres database.

Data Exchange with SQLite

SQLite is a lightweight, file-based DBMS. The Connect agents SQLReader and SQLWriter provide three possible connection types to connect to a SQLite database: sqlite, pyodbc and sqlalchemy. The DBMS-specific connection type sqlite utilizes the specialized sqlite3 Python library, providing significant performance gains over the generic connection types pyodbc and sqlalchemy.

The following section presents an example that uses the connection type sqlite. Refer to the sections Data Exchange using PyODBC and Data Exchange using SQLAlchemy for examples on how to connect using pyodbc and sqlalchemy.

Import from SQLite

Consider the following example that imports data from a local SQLite database file using the SQLReader agent with connectionType: sqlite (default):

Set i 'canning plants', j 'markets';

Parameter d(i<,j<) 'distance in thousands of miles'

a(i) 'capacity of plant i in cases'

b(j) 'demand at market j in cases';

Scalar f 'freight in dollars per case per thousand miles';

$onEmbeddedCode Connect:

- SQLReader:

connection: {'database': 'D:\Projects\FileBasedDBs\transport_data.db'}

symbols:

- name: d

query: "SELECT * FROM d;"

- name: a

query: "SELECT * FROM a;"

- name: b

query: "SELECT * FROM b;"

- name: f

query: "SELECT * FROM f;"

- GAMSWriter:

symbols: all

$offEmbeddedCode

display i, j, d, a, b, f;

[...]

The sqlite3 command-line tool allows to inspect the tables in the SQLite database file transport_data.db. It contains four tables with data for the parameters d, a, b and f:

SQLite version 3.8.5 2014-06-04 14:06:34 Enter ".help" for usage hints. sqlite> .headers on sqlite> .mode column sqlite> SELECT * FROM d; i j value ---------- ---------- ---------- seattle new-york 2.5 seattle chicago 1.7 seattle topeka 1.8 san-diego new-york 2.5 san-diego chicago 1.8 san-diego topeka 1.4 sqlite> SELECT * FROM a; i value ---------- ---------- seattle 350.0 san-diego 600.0 sqlite> SELECT * FROM b; j value ---------- ---------- new-york 325.0 chicago 300.0 topeka 275.0 sqlite> SELECT * FROM f; value ---------- 90.0 sqlite>

The example uses compile-time embedded Connect code to import the data from the SQLite database file into GAMS. The SQLReader agent reads the data from the SQLite database file and creates the corresponding parameters in the Connect database. The GAMSWriter agent then writes all parameters to the GAMS database outside of the embedded Connect code. Note that the sets i and j are implicitly defined through the parameter d. Embedded Connect code is also available at execution-time, see the example in the following section Export to SQLite.

Export to SQLite

Consider the following example that exports data to a SQLite database file using the SQLWriter agent with connectionType: sqlite (default):

[...]

Model transport / all /;

solve transport using lp minimizing z;

embeddedCode Connect:

- GAMSReader:

symbols:

- name: x

- Projection:

name: x.l(i,j)

newName: x_level(i,j)

- SQLWriter:

connection: {'database': 'D:\Projects\FileBasedDBs\transport_data.db'}

ifExists: replace

symbols:

- name: x_level

tableName: xLevel

endEmbeddedCode

After solving the model, execution-time embedded Connect code is used to export the results to the SQLite database file transport_data.db. The GAMSReader agent reads variable x from the GAMS database into the Connect database. The Projection agent is used to create the new parameter x_level that contains only the level values of variable x. Finally, the parameter x_level is written to the table xLevel in the SQLite database file using the SQLWriter agent. Note that if the database file does not already exist at the export location, the SQLWriter creates a new database file at the path specified in the database parameter of the connection dictionary.

Here is how table xLevel looks like after exporting parameter x_level:

sqlite> SELECT * FROM xLevel; i j value ---------- ---------- ---------- seattle new-york 50.0 seattle chicago 300.0 seattle topeka 0.0 san-diego new-york 275.0 san-diego chicago 0.0 san-diego topeka 275.0 sqlite>

Export Using SqliteWrite

An alternative way to export data to a SQLITE database file is to use SqliteWrite from the GAMS Tools Library. Consider the following example that utilizes SqliteWrite to export the variable x to a new SQLite database file output.db.

[...]

Model transport / all /;

solve transport using lp minimizing z;

executeTool "sqlitewrite ids=x o=output.db";

After solving the model, the GAMS tool SqliteWrite is used during execution-time to export the variable x to a new SQLite database file output.db. The tool stores all the attributes of the variable x in a new table of the same name x.

Here is how the table x is stored in the database file.

sqlite> .headers ON sqlite> .mode column sqlite> SELECT * FROM x; i j level marginal lower upper scale ---------- ---------- ---------- ---------- ---------- ---------- ---------- seattle new-york 50.0 0.0 0.0 Inf 1.0 seattle chicago 300.0 0.0 0.0 Inf 1.0 seattle topeka 0.0 0.036 0.0 Inf 1.0 san-diego new-york 275.0 0.0 0.0 Inf 1.0 san-diego chicago 0.0 0.00900000 0.0 Inf 1.0 san-diego topeka 275.0 0.0 0.0 Inf 1.0 sqlite>

Data Exchange with SQL Server

SQL Server is a proprietary relational DBMS developed by Microsoft. The Connect agents SQLReader and SQLWriter provide three possible connection types to connect to a SQL Server database: sqlserver, pyodbc and sqlalchemy. The DBMS-specific connection type sqlserver utilizes the specialized pymssql Python library, providing enhanced performance compared to generic connection types sqlalchemy and pyodbc.

Below, we present an example using the connection type sqlserver. Refer to the sections Data Exchange using PyODBC and Data Exchange using SQLAlchemy for examples on how to connect using pyodbc and sqlalchemy.

Import from SQLServer

Consider the following example that imports data from a SQL Server database using the SQLReader agent with connectionType: sqlserver:

Set i 'canning plants', j 'markets';

Parameter d(i<,j<) 'distance in thousands of miles'

a(i) 'capacity of plant i in cases'

b(j) 'demand at market j in cases';

Scalar f 'freight in dollars per case per thousand miles';

$onEmbeddedCode Connect:

- SQLReader:

connection: {'user': 'sa', 'password': 'strong_password', 'database': 'master', 'host': 'VJGAMS'}

connectionType: sqlserver

symbols:

- name: d

query: "SELECT * FROM d;"

- name: a

query: "SELECT * FROM a;"

- name: b

query: "SELECT * FROM b;"

- name: f

query: "SELECT * FROM f;"

- GAMSWriter:

symbols: all

$offEmbeddedCode

display i, j, d, a, b, f;

[...]

Microsoft SQL Server provides a command-line tool, sqlcmd, which allows to inspect the tables in the database. The SQL Server database contains four tables with data for the parameters d, a, b and f:

>sqlcmd -s "|" -W 1> select * from d; 2> go i|j|value -|-|----- seattle|new-york|2.5 seattle|chicago|1.7 seattle|topeka|1.8 san-diego|new-york|2.5 san-diego|chicago|1.8 san-diego|topeka|1.3999999999999999 (6 rows affected) 1> select * from a; 2> go i|value -|----- seattle|350.0 san-diego|600.0 (2 rows affected) 1> select * from b; 2> go j|value -|----- new-york|325.0 chicago|300.0 topeka|275.0 (3 rows affected) 1> select * from f; 2> go value ----- 90.0 (1 rows affected) 1>

The example uses compile-time embedded Connect code to import the data from the SQL Server database into GAMS. The SQLReader agent reads the data from the SQL Server database and creates the corresponding parameters in the Connect database. The GAMSWriter agent then writes all parameters to the GAMS database outside of the embedded Connect code. Note that the sets i and j are implicitly defined through the parameter d. Embedded Connect code is also available at execution-time, see the example in the following section Export to SQL Server.

Export to SQL Server

Consider the following example that exports data to a SQL Server database using the SQLWriter agent with connectionType: sqlserver:

[...]

Model transport / all /;

solve transport using lp minimizing z;

embeddedCode Connect:

- GAMSReader:

symbols:

- name: x

- Projection:

name: x.l(i,j)

newName: x_level(i,j)

- SQLWriter:

connection: {'user': 'sa', 'password': 'strong_password', 'database': 'master', 'host': 'VJGAMS'}

connectionType: sqlserver

ifExists: replace

symbols:

- name: x_level

tableName: xLevel

endEmbeddedCode

After solving the model, execution-time embedded Connect code is used to export the results to the SQL Server database. The GAMSReader agent reads variable x from the GAMS database into the Connect database. The Projection agent is used to create the new parameter x_level that contains only the level values of variable x. Finally, the parameter x_level is written to the table xLevel in the SQL Server database using the SQLWriter agent.

Here is how table xLevel looks like after exporting parameter x_level:

>sqlcmd -s "|" -W 1> select * from xLevel; 2> go i|j|value -|-|----- seattle|new-york|50.0 seattle|chicago|300.0 seattle|topeka|0.0 san-diego|new-york|275.0 san-diego|chicago|0.0 san-diego|topeka|275.0 (6 rows affected)

The SQLWriter with connectionType: sqlserver provides three insertion methods for writing to a SQL Server database: default (default), bulkInsert and bcp. See also insertMethod in the SQLWriter documentation. The default insertion method used in the example above is well suited for exporting small and medium sized data. The bulkInsert insertion method allows for faster insertion when exporting large amounts of data. It creates a temporary CSV file and then imports the file into the SQL Server database using the BULK INSERT query. However, for this insert method to work, the database server must be running locally since the temporary CSV file cannot be created on a remote server.

- Note

- Use

connectionType: sqlserverwith optioninsertMethod: bulkInsertwhen exporting large amounts of data to a local SQL Server database.

Another method to insert large amounts of data that is also available for remote SQL Server databases is the bcp insertion method based on the bulk copy program (bcp) utility of SQL Server.

- Note

- Use

connectionType: sqlserverwith optioninsertMethod: bcpwhen exporting large amounts of data to a SQL Server database. In order to use the insert methodbcp, the ODBC driver for SQL Server and the bcp utility must be installed on the system. For more information on installing both requirements, refer to the official bcp utility documentation.